How to conveniently transfer data to and from FTP servers using DBSync

In this series of three articles we explain why it is advantageous to use DBSync to transfer data between Salesforce and an FTP server, and how to do it.

In the first part, we saw how to create a connection between DBSync and Salesforce, and between DBSync and an FTP server. In this second article, we will see how to create a process, a workflow, a trigger, a rule and a mapping, and how to configure the post processing.

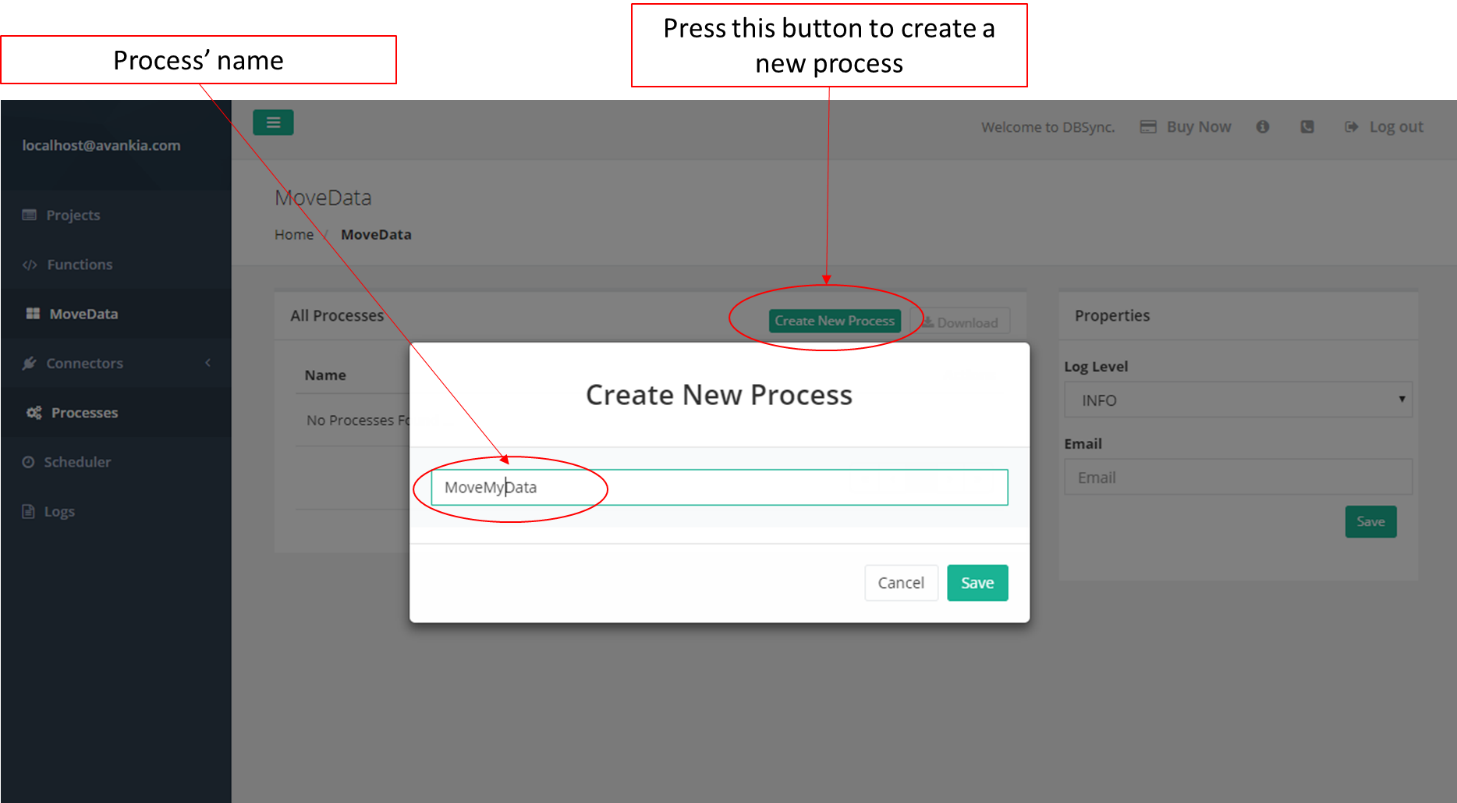

Step 4: Create a process

Once we are connected to the source and the destination, we need to create a process that defines the task we want to perform. To do this, we click the Create New Process button and enter a name. In our example we use the name “MoveMyData”. Note that process names cannot contain spaces.

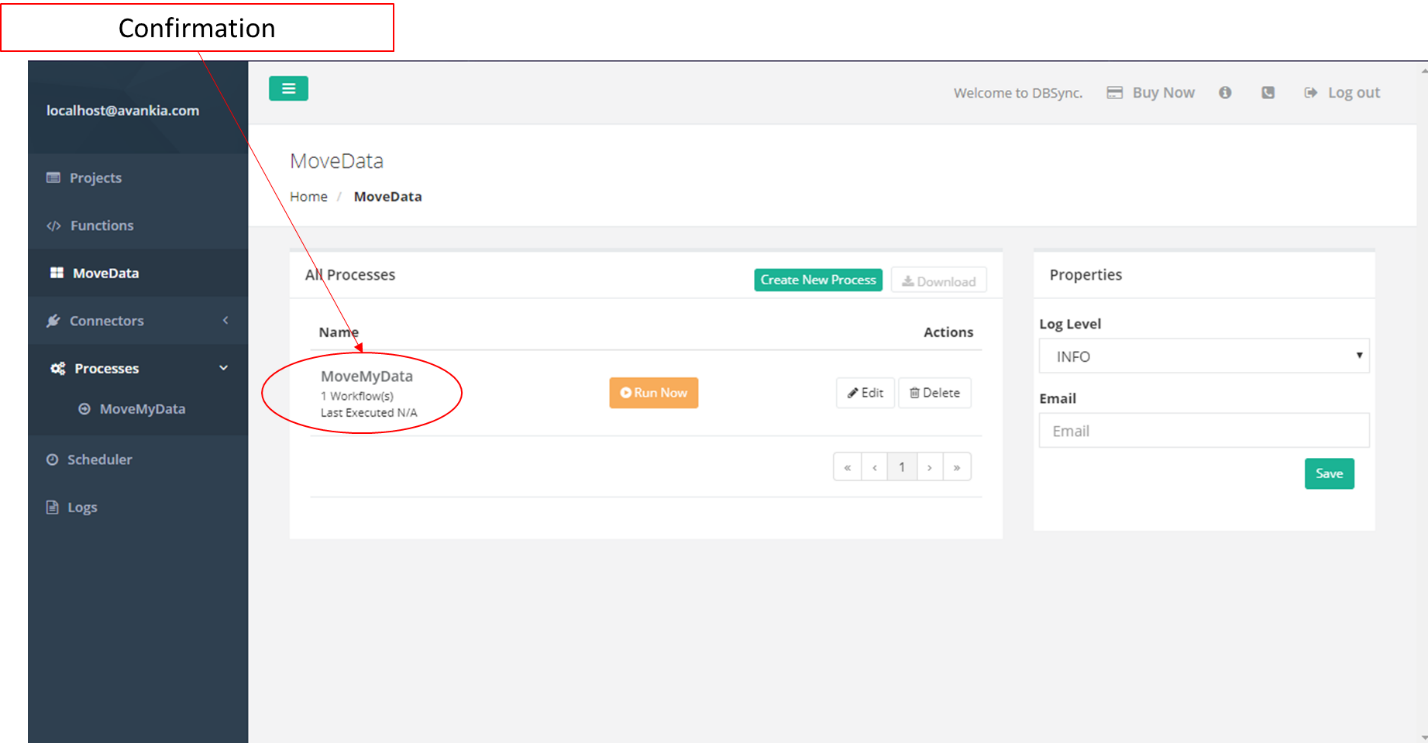

We then get a confirmation of the newly created process:

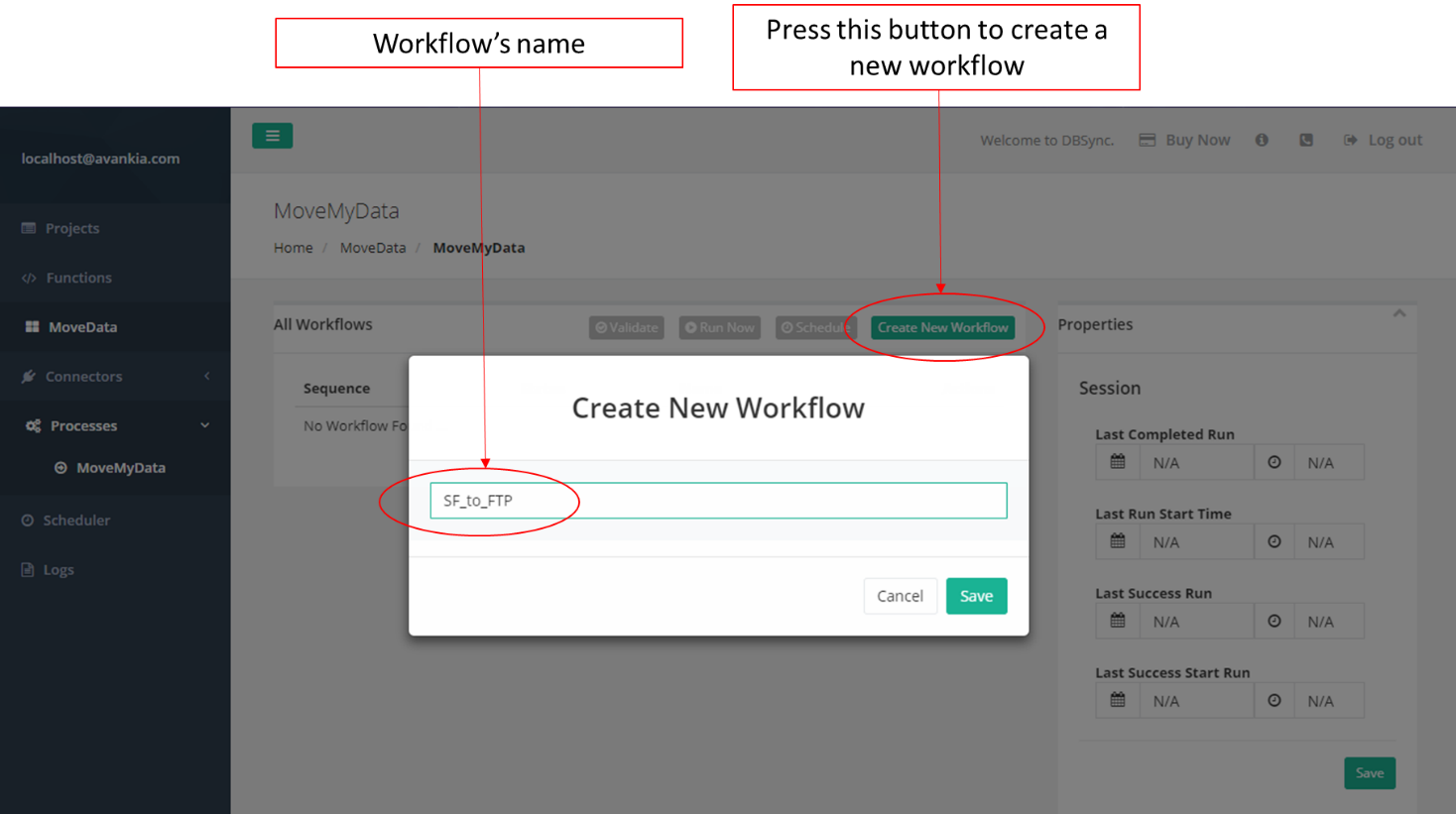

Step 5: Create a workflow

A process contains one or more workflows. To create a new workflow, we click the Create New Workflow button. The system asks for a workflow name, which in our example is “SF_to_FTP” (figure 10). As with process names, the workflow name cannot contain spaces.
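The naming rule for processes and workflows can be checked before typing a name into the dialog. The following is a minimal sketch, assuming the only constraint is the one stated in this article (no spaces); the function name `is_valid_name` is hypothetical and not part of DBSync.

```python
import re


def is_valid_name(name: str) -> bool:
    """Return True if the name is non-empty and contains no whitespace.

    Assumption: "no spaces" is the only rule, as stated in the article.
    DBSync may enforce further restrictions that are not modelled here.
    """
    return bool(name) and re.search(r"\s", name) is None


print(is_valid_name("MoveMyData"))    # True
print(is_valid_name("SF_to_FTP"))     # True
print(is_valid_name("Move My Data"))  # False
```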

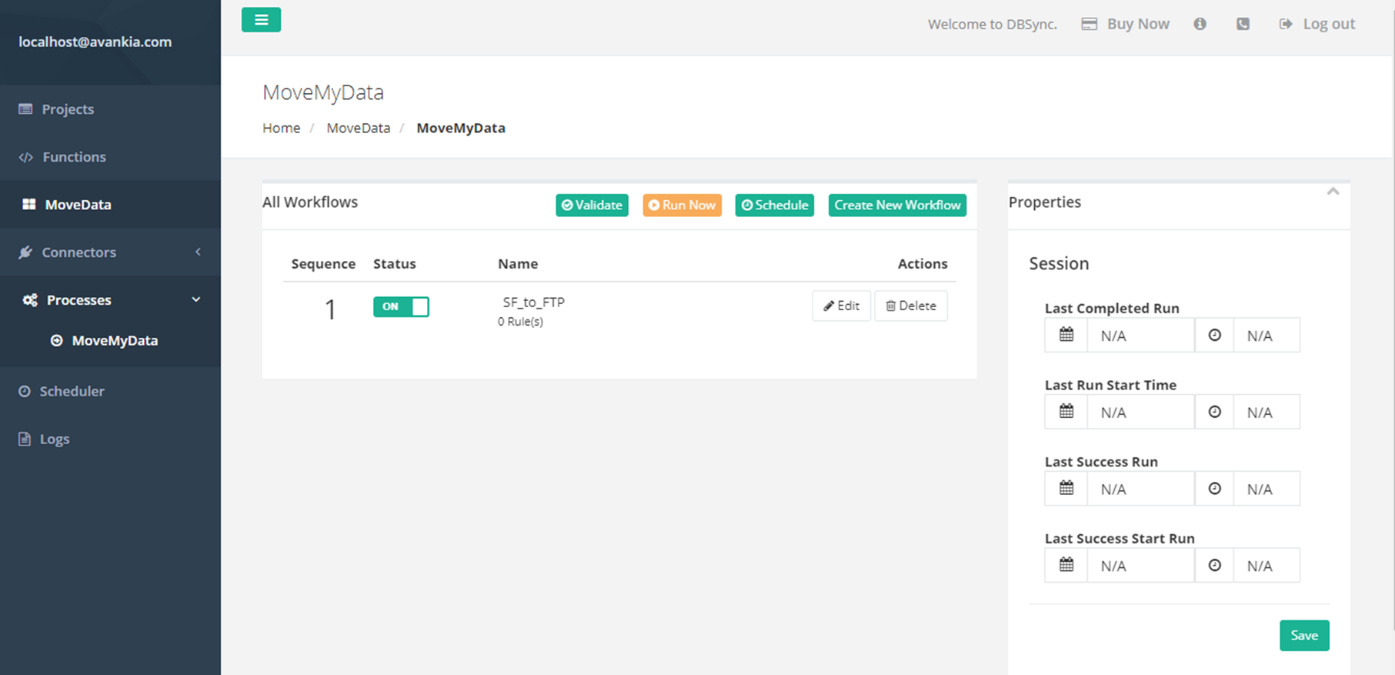

After clicking the Save button, we are taken to a screen that shows the newly created workflow.

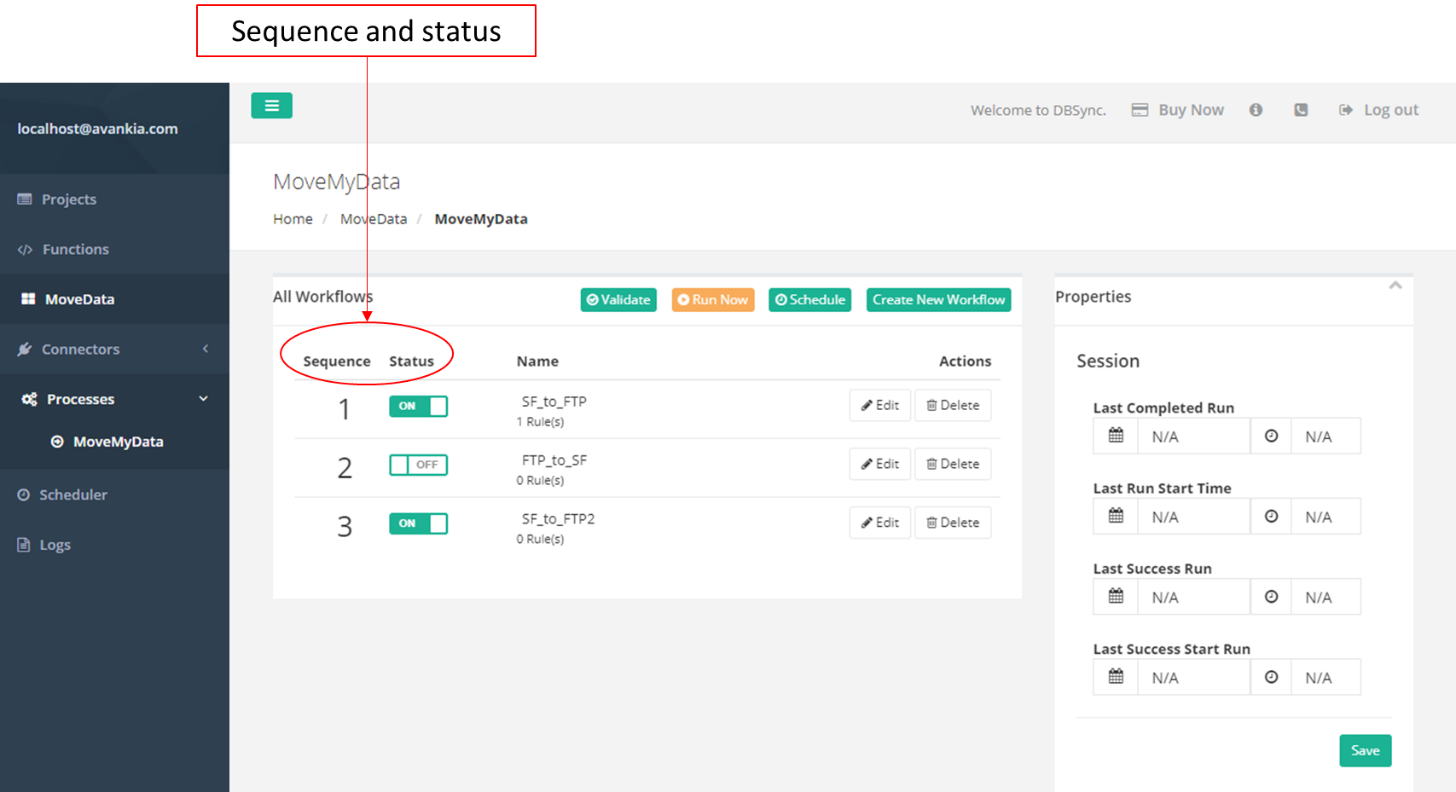

It is important to know that we can create more than one process. Those processes will run in sequence. In addition, each process has a status, which can be ON or OFF. If the status is OFF, the process will be ignored during the run.

For example, in figure 13, we have defined three processes. Process number two has its status set to OFF. As a consequence, when we run the sequence, the order will be process 1 followed by process 3. Process 2 will be ignored.
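The ON/OFF behaviour described above can be pictured with a small model. This is only an illustrative sketch, not DBSync code: the `Process` class and the `run_order` function are hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Process:
    name: str
    status: str  # "ON" or "OFF", as shown in the DBSync console


def run_order(processes: List[Process]) -> List[str]:
    """Return the names that would run, in sequence, skipping OFF entries."""
    return [p.name for p in processes if p.status == "ON"]


processes = [
    Process("process 1", "ON"),
    Process("process 2", "OFF"),  # ignored during the run
    Process("process 3", "ON"),
]
print(run_order(processes))  # ['process 1', 'process 3']
```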

Step 6: Configure the trigger

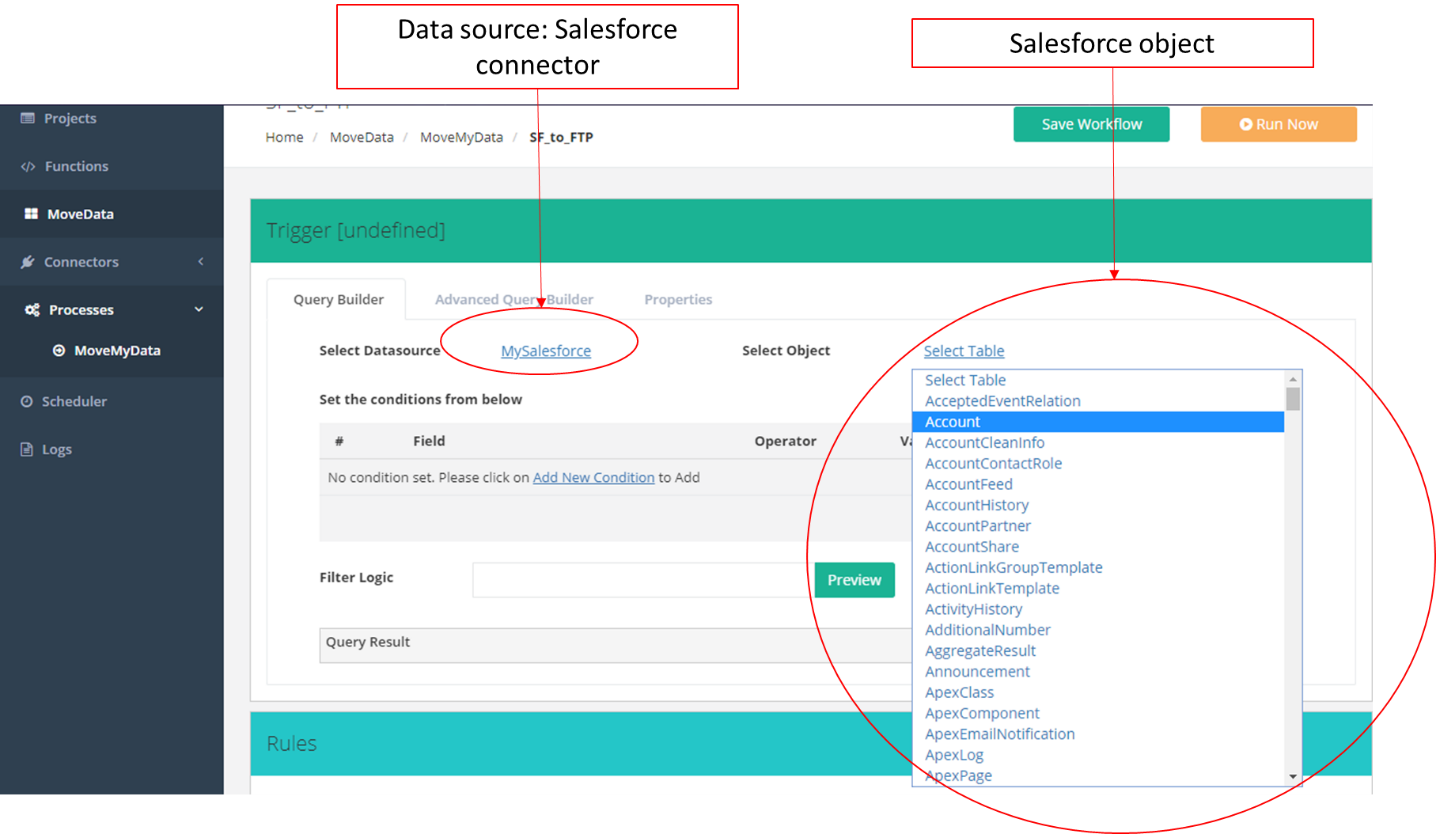

We now need to configure a trigger, which will contain a query to Salesforce. Figure 14 shows how to do this.

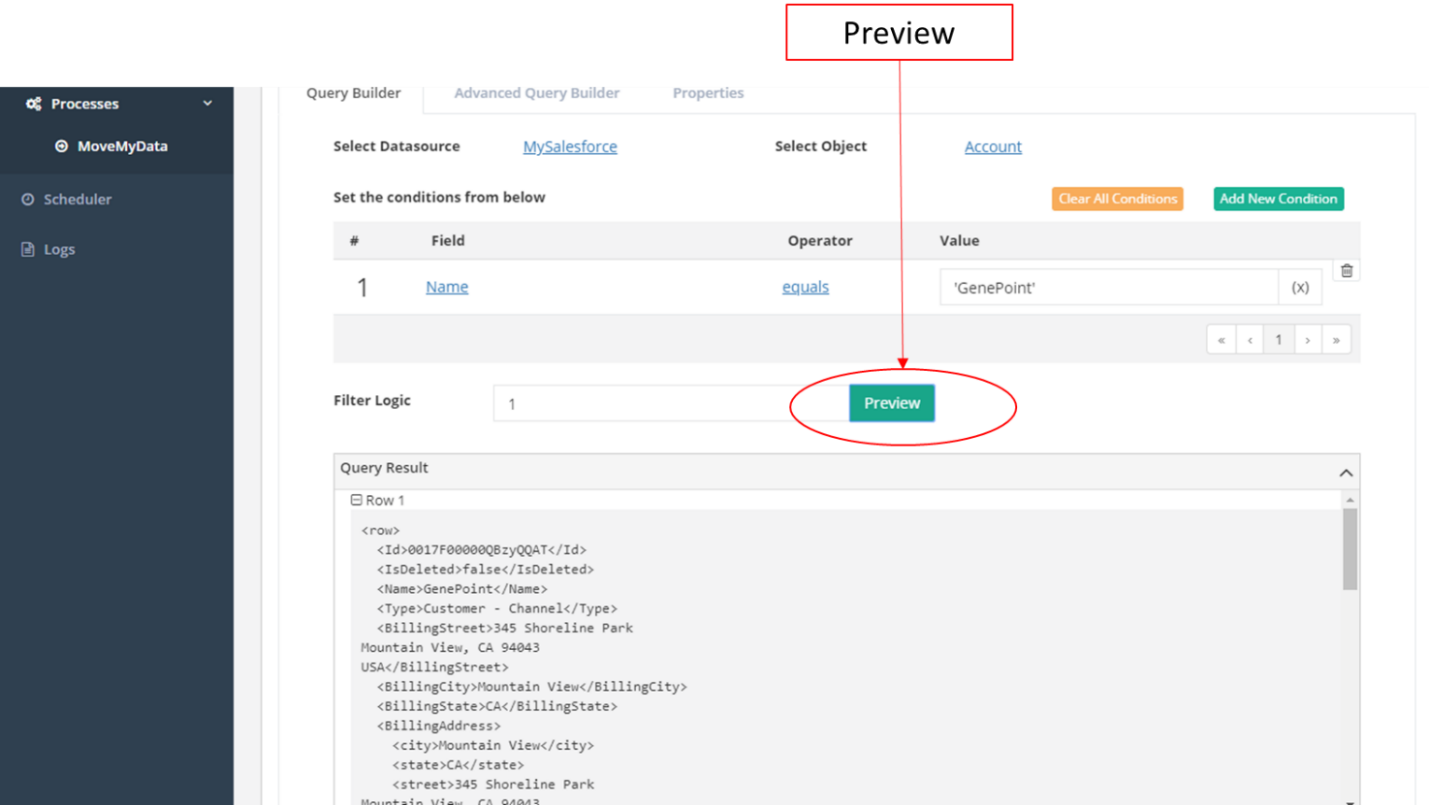

Once we have constructed our query, we can check the results by clicking on the Preview button. The results of the query will be displayed under the Query Result section.

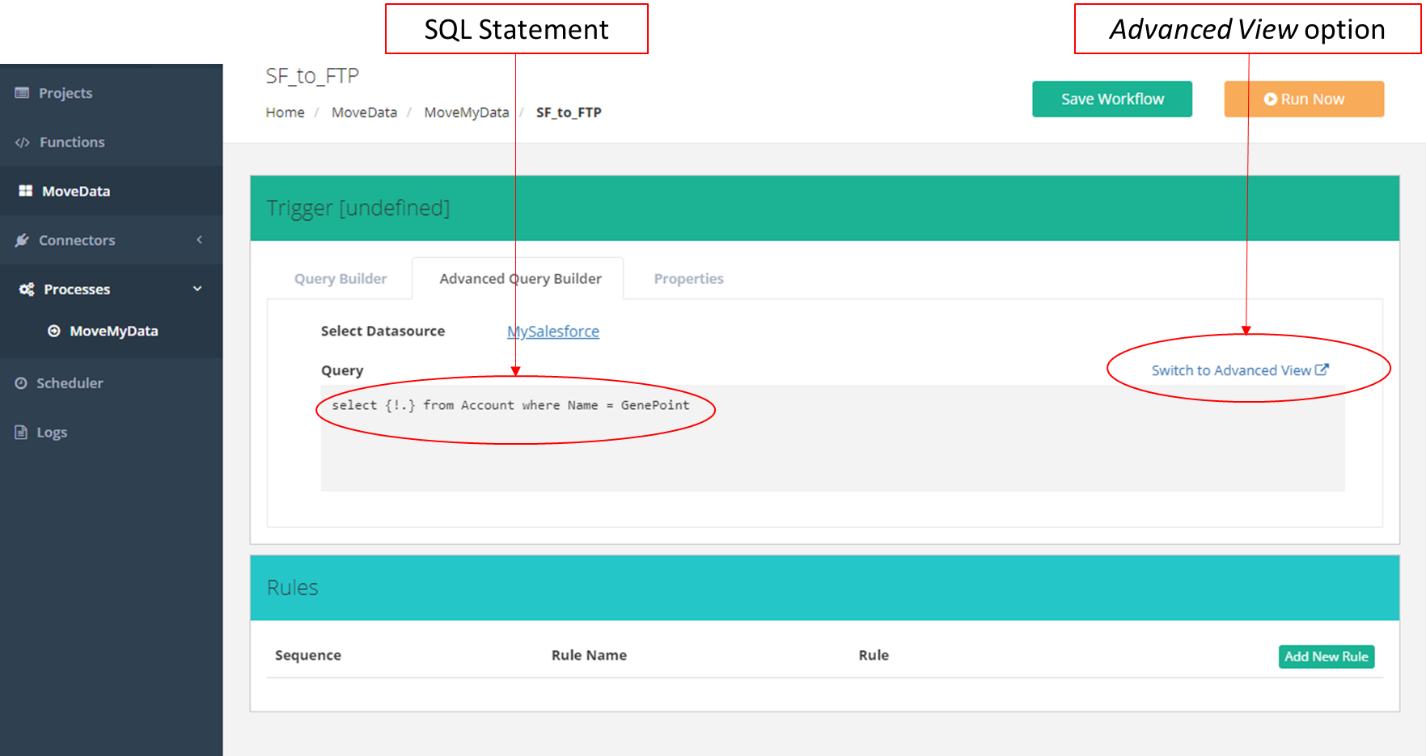

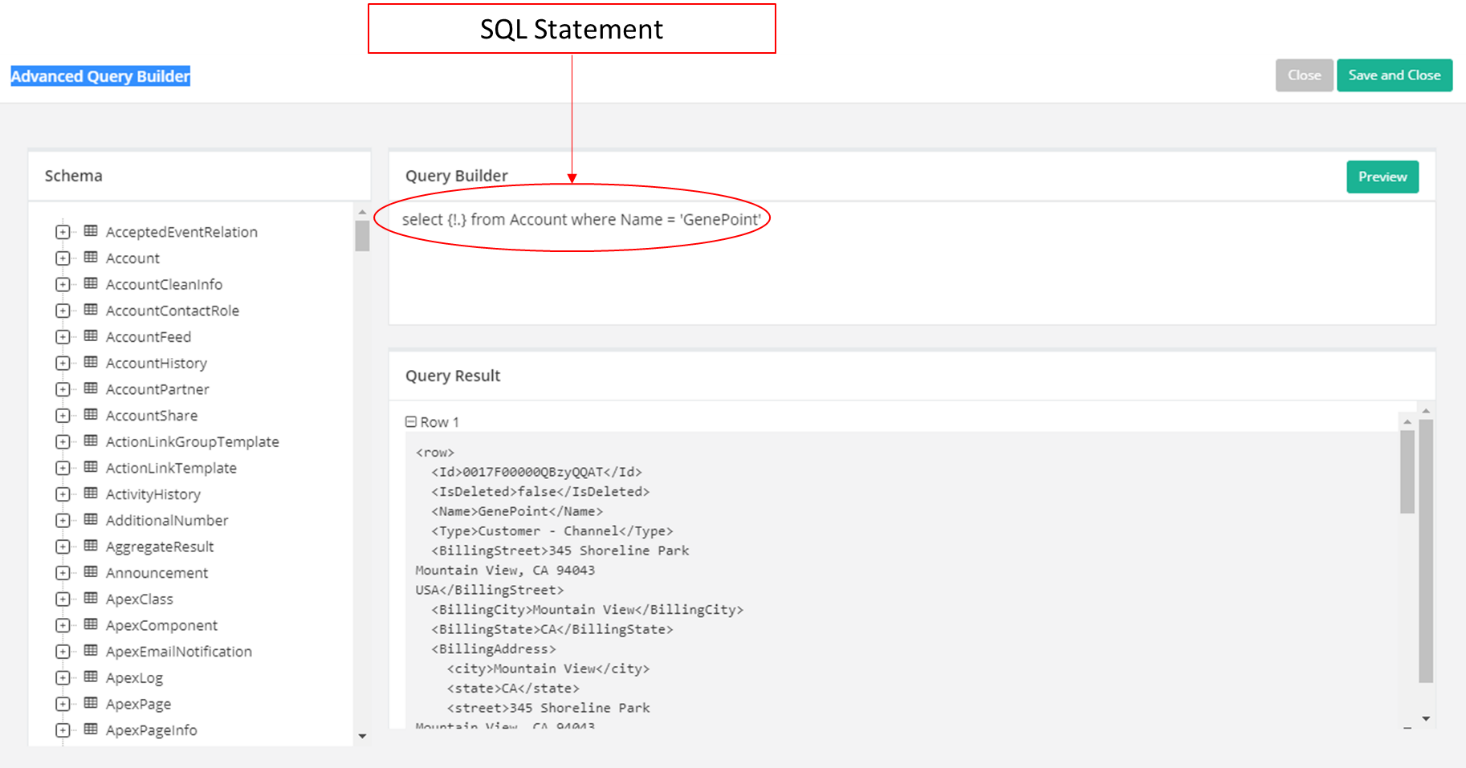

We can also use the Advanced Query Builder. This option lets us work directly with the query statement and make the necessary modifications by hand. To do this, we click on Switch to Advanced View.

Here too, we can click on the Preview button to check that the result of the query is what we expect. Once we are happy with our modification, we can Save and Close. If we don’t want to change the original query, we can simply Close.
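To give an idea of what the statement in the advanced view might look like, here is a minimal sketch that assembles a Salesforce-style query from an object name and a field list. The article does not say which object or fields are queried, so `Account`, `Id`, `Name` and `Phone` are assumptions chosen for illustration, and `build_query` is a hypothetical helper, not a DBSync function.

```python
from typing import Optional, Sequence


def build_query(sobject: str, fields: Sequence[str], where: Optional[str] = None) -> str:
    """Assemble a simple SELECT statement for one object.

    Assumption: the trigger accepts a plain SELECT ... FROM ... [WHERE ...]
    statement; the object and field names used below are illustrative only.
    """
    if not fields:
        raise ValueError("at least one field is required")
    query = f"SELECT {', '.join(fields)} FROM {sobject}"
    if where:
        query += f" WHERE {where}"
    return query


print(build_query("Account", ["Id", "Name", "Phone"], "Phone != null"))
# SELECT Id, Name, Phone FROM Account WHERE Phone != null
```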

Step 7: Create a rule

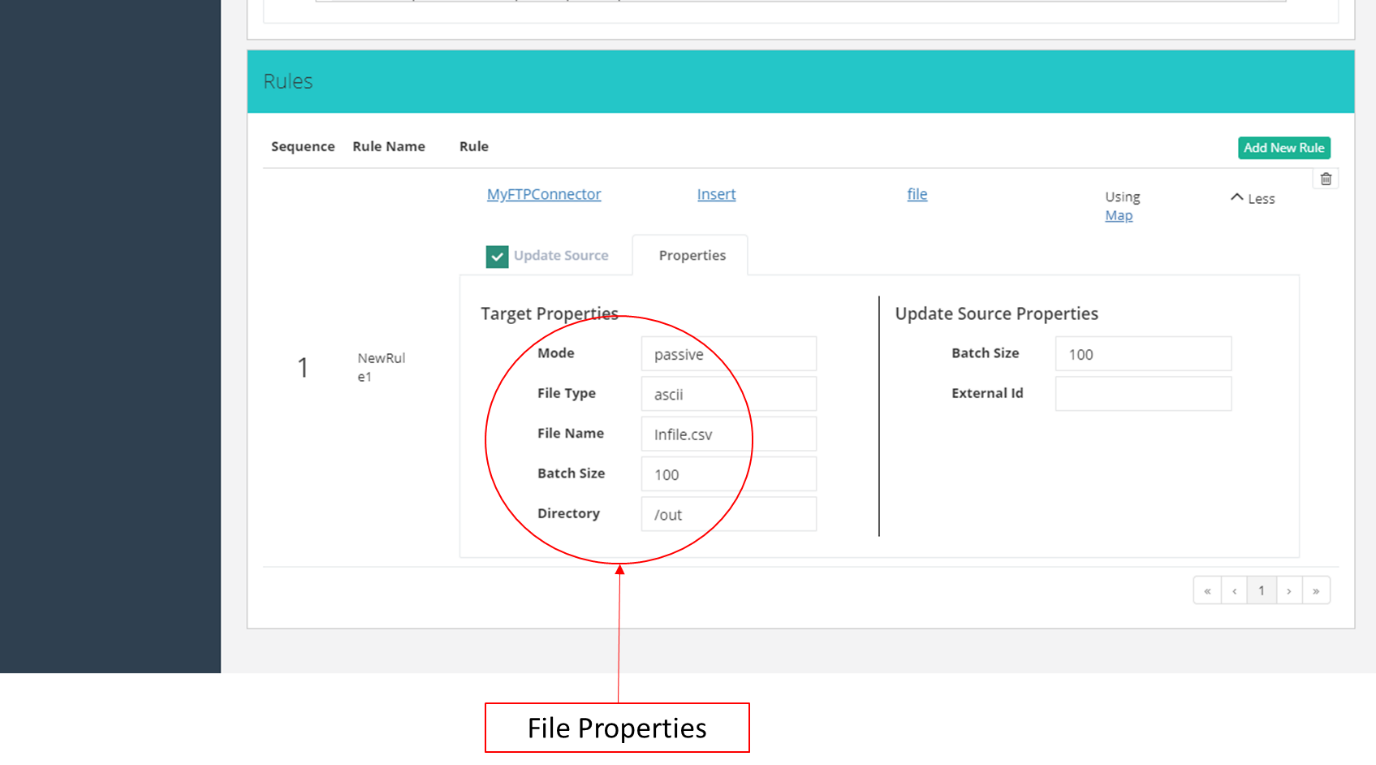

In the Rules section we define the properties of the file(s) that will store the results of our query. In our case we leave Mode at its default value (passive), select ASCII as the file type to store our records, and set the name of the file that will contain the records to “infile.csv”. The Batch field defines the number of records that will be processed at once. Finally, we enter the name of the directory that will contain our file(s).
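The effect of the Batch field can be pictured as splitting the query result into fixed-size chunks. The sketch below only illustrates that idea; how DBSync batches records internally is not described in this article, and the `batches` function is a hypothetical name.

```python
from typing import Iterator, List, Sequence


def batches(records: Sequence, batch_size: int) -> Iterator[List]:
    """Yield consecutive chunks of at most batch_size records."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(records), batch_size):
        yield list(records[start:start + batch_size])


records = list(range(1, 8))  # seven placeholder records
print([len(chunk) for chunk in batches(records, 3)])  # [3, 3, 1]
```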

Note: A practical way to name the file(s) is by including the date. In this case, the name syntax is “infile_{0,date,dd-MM-yyyy-HHmmss}.csv”.
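To see what kind of file name the pattern in the note would produce, the sketch below builds an equivalent name in Python. It assumes the pattern letters carry their usual meaning (dd day, MM month, yyyy year, HH hour, mm minutes, ss seconds); that reading, and the `dated_filename` helper, are assumptions rather than documented DBSync behaviour.

```python
from datetime import datetime


def dated_filename(now: datetime) -> str:
    """Mimic "infile_{0,date,dd-MM-yyyy-HHmmss}.csv" using strftime codes."""
    return f"infile_{now.strftime('%d-%m-%Y-%H%M%S')}.csv"


# A fixed timestamp keeps the example reproducible.
print(dated_filename(datetime(2024, 3, 5, 14, 7, 9)))
# infile_05-03-2024-140709.csv
```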

Step 8: Define a mapping

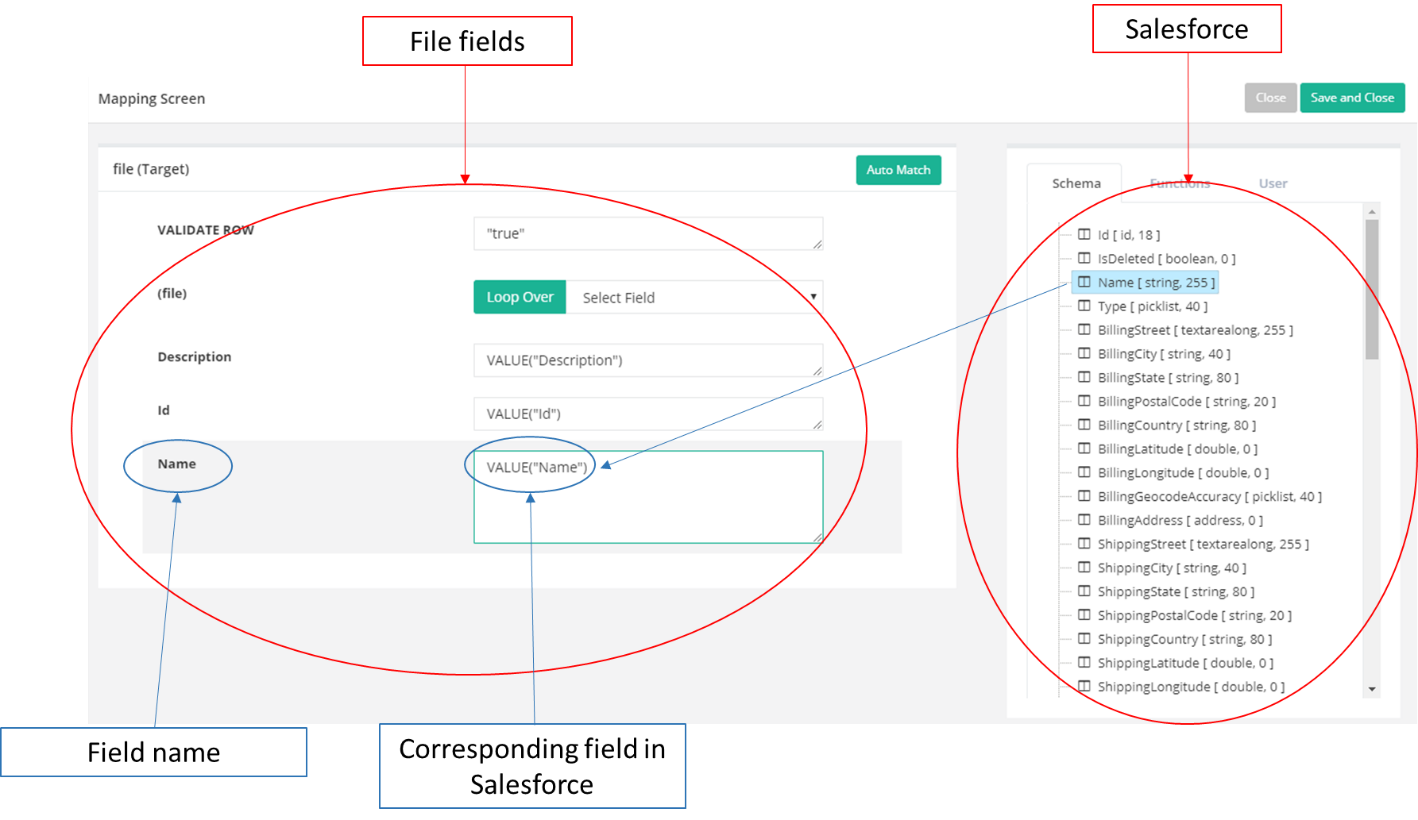

A mapping describes the relationship between the fields in the FTP server and the fields in the application. In our case, we need to link the fields in Salesforce to the fields in our target file. Figure 20 shows how to do this.
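Conceptually, a mapping is a table that says which source field feeds which column of the target file. The following sketch shows that idea with a plain dictionary and the standard `csv` module. The Salesforce field names and the column names are assumptions for illustration, and `to_csv` is a hypothetical helper that stands in for what DBSync does through its mapping screen.

```python
import csv
import io
from typing import Dict, Iterable

# Hypothetical mapping: Salesforce field -> column in the target file.
FIELD_MAP = {"Id": "account_id", "Name": "account_name", "Phone": "phone"}


def to_csv(records: Iterable[Dict[str, str]], field_map: Dict[str, str]) -> str:
    """Render records as CSV text, renaming fields according to field_map."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(field_map.values()), lineterminator="\n"
    )
    writer.writeheader()
    for record in records:
        # Fields missing from a record are written as empty values.
        writer.writerow(
            {target: record.get(source, "") for source, target in field_map.items()}
        )
    return buffer.getvalue()


sample = [{"Id": "001", "Name": "Acme", "Phone": "555-0100"}]
print(to_csv(sample, FIELD_MAP))
```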

Step 9: Configure the Post Processing

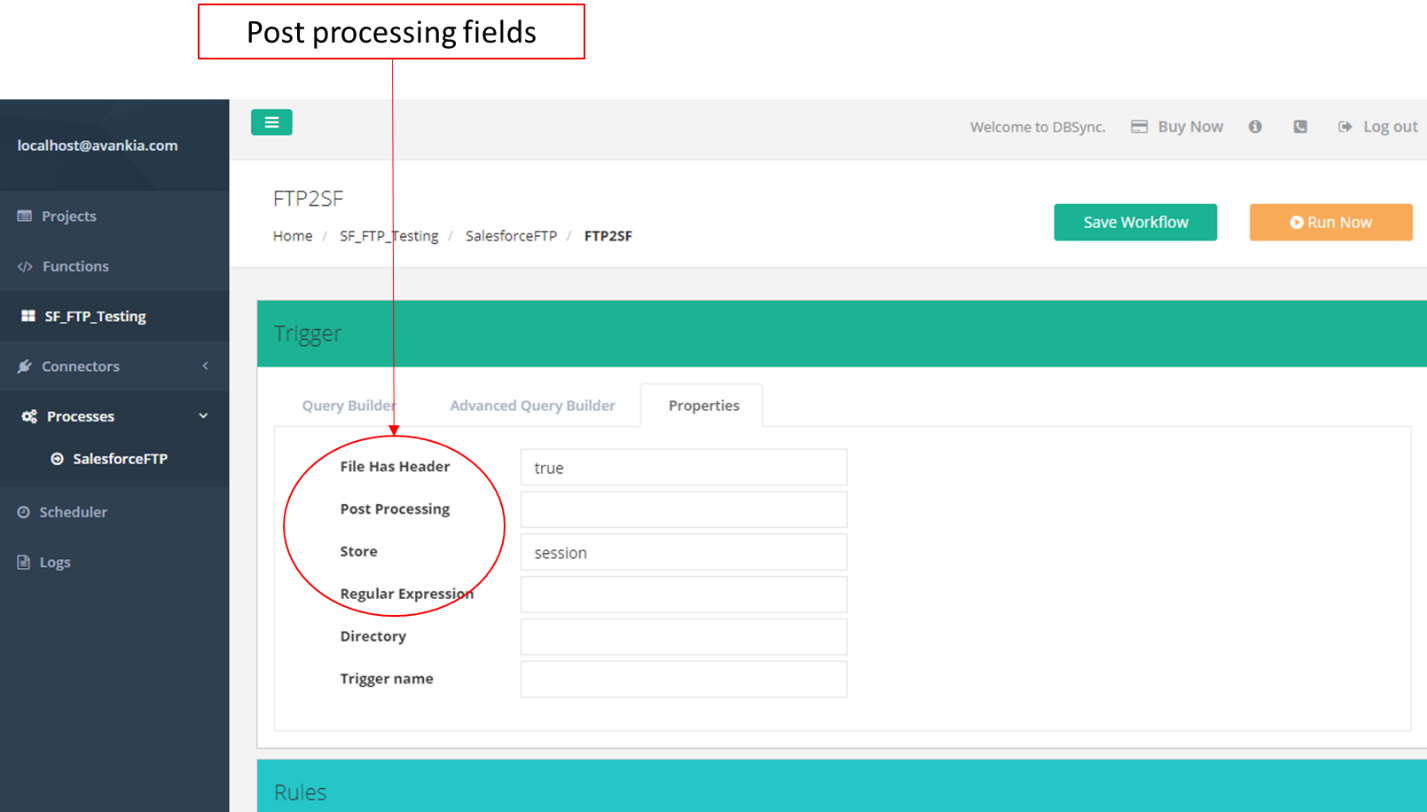

Post processing allows us to configure actions to be taken after the process has been completed. The action can be any operating system command, or an executable file. The command may include the name of a file, which is defined by a regular expression. If the File Has Header field is set to True, then the operation includes header details in the result. The fields Directory and Trigger Name must be left blank. In our example we won’t include any post-processing instruction.
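Although our example uses no post-processing, the idea of applying a command to files selected by a regular expression can be sketched as follows. The command (`gzip`), the file names and the `commands_for` helper are all assumptions for illustration. The sketch only builds the command lines and does not execute anything.

```python
import re
from typing import List


def commands_for(filenames: List[str], pattern: str, command: List[str]) -> List[List[str]]:
    """Build one command line per file whose whole name matches the pattern."""
    regex = re.compile(pattern)
    return [command + [name] for name in filenames if regex.fullmatch(name)]


files = ["infile_05-03-2024-140709.csv", "notes.txt", "infile.csv"]
print(commands_for(files, r"infile.*\.csv", ["gzip"]))
# [['gzip', 'infile_05-03-2024-140709.csv'], ['gzip', 'infile.csv']]
```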

Wrapping up Part 2

In this second part of our three-article series, we have shown how to create a process, a workflow, a trigger, a rule, and a mapping, and how to configure the post processing.

In the next article, we will run this project and see the results.
